A generative AI-centric proposal that was released this week is worthy of attention. According to sponsors Sens. Chris Coons (D-DE), Marsha Blackburn (R-TN), Amy Klobuchar (D-MN), and Thom Tillis (R-NC), the Nurture Originals, Foster Art, and Keep Entertainment Safe (“NO FAKES”) Act of 2023 “would protect the voice and visual likeness of all individuals from unauthorized recreations from generative AI.” In a one-pager, the senators state that the NO FAKES Act aims to address “the use of non-consensual digital replications in … audiovisual works or sound recordings,” such as the unauthorized use of Drake and The Weeknd’s voices in the song “Heart on My Sleeve,” and more recently, the use of an AI-generated deepfake of Tom Hanks in ads for a dental plan that he is not affiliated with.

Specifically, the draft legislation would …

– Hold individuals or companies liable if they produce an unauthorized digital replica of an individual in a performance;

– Hold platforms liable for hosting an unauthorized digital replica if the platform knows that the replica was not authorized by the individual depicted; and

– Exclude certain digital replicas from coverage based on recognized First Amendment protections, such as news, documentary, and parody uses.

In short: The bill would give rise to a federal right of publicity, a legal right designed to protect individuals’ names and likenesses against unauthorized exploitation for commercial purposes.

This is not a surprising proposal. In fact, a federal right of publicity statute is an issue that Sen. Coons, for one, has been raising in connection with AI-specific hearings hosted by the Senate Judiciary Committee’s Subcommittee on Intellectual Property, which he chairs. While about half of U.S. states have recognized the right of publicity, a lack of uniformity among such state laws – and the resulting unpredictability – has prompted renewed calls for a federal statute in light of the rapid rise of deepfakes.

“While some have long been calling for a statute establishing a federal right of publicity, the advent of generative artificial intelligence has brought new purchase to this argument,” Mintz’s Bruce Sokler, Alexander Hecht, Christian Tamotsu Fjeld, and Raj Gambhir stated in a note this summer. Sen. Coons, in particular, asserted during the Subcommittee on IP’s hearing on AI and copyright in July that establishing a federal right of publicity may be “necessary to strike the right balance between creators’ rights and AI’s ability to enhance innovation and creativity.”

As we explored at length here, others, ranging from the Copyright Office to Adobe’s general counsel, have raised the issue of a federal right of publicity in connection with generative AI.

Back to the NO FAKES Act … the bill, which was “released as a discussion draft,” would generally prohibit the “production of a digital replica without consent of the applicable individual or rights holder,” a right that would last throughout an individual’s lifetime and for 70 years after their death.

>> If you’re looking for a deeper dive, University of Pennsylvania law professor (and right of publicity expert) Jennifer Rothman submitted some “considerations for federal right of publicity and digital impersonation legislation” to the IP Subcommittee. At a high level, she stated that the many issues Congress would need to consider in any such legislation include: “(1) Its interplay with existing state right of publicity laws; (2) The treatment of ordinary people; (3) Limiting the transferability of such a newly-created right; (4) First Amendment and Free Speech limits; (5) Potential Conflicts with Copyright; and (6) Intermediary Liability and Section 230.” Rothman’s submission can be found here.

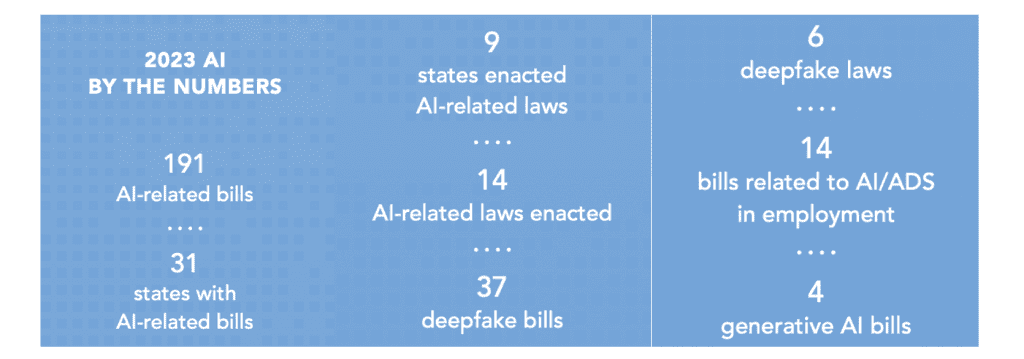

THE BIGGER PICTURE: AI continues to be top of mind for federal and state lawmakers alike. At the state level, legislators had introduced 191 AI-related bills as of September 21, 2023, according to data from software industry alliance BSA. (You can find our AI Legislation Tracker here.) That represents a 440 percent year-over-year increase in AI-specific bills, most of which are aimed at “regulating specific AI use cases, requiring AI governance frameworks, creating inventories of states’ uses of AI, establishing task forces and committees, and addressing the state governments’ AI use.”

A few key findings from the BSA’s 2023 State AI Legislation Summary …

– Despite the 440 percent increase in bill introductions, only 29 bills (15 percent) passed at least one legislative chamber, and only 14 of those became law. BSA expects both the volume of AI bills and the likelihood of their passage to increase;

– Connecticut, Florida, Illinois, Louisiana, Minnesota, Montana, Texas, Virginia, and Washington all passed AI legislation. California enacted legislation to conduct a survey of the state’s use of high-risk AI;

– Most enacted bills addressed deepfakes, government use of AI (including by law enforcement), and task forces or committees;

– Deepfake bills increased by 50 percent from last year. About 16 percent of deepfake bills were enacted, one of the highest enactment rates for any BSA-tracked issue. All the deepfake bills introduced in 2023 were related to specific topics, such as sexually explicit or political material; and

– Municipal interest in AI surged, too, as Boston, Miami, New York City, San Jose (CA), and Seattle all created regulations and guidelines on various aspects of AI, including generative AI, automated employment decision systems, and impact assessments. The National Association of Counties, in particular, is taking a proactive lead on AI policy.